Last week the Horror Writers Association sent a draft artificial intelligence policy to members, inviting comments. The full text of the draft has not been made public. However, some discussion of it has spilled over into social media, primarily negative reactions about two areas: (1) whether writing produced with the assistance of the latest types of spellcheck would be disqualified from HWA awards, and (2) whether it is proper to allow works which would otherwise qualify but have been published in places that accept generative-AI art (for example) to compete for HWA awards.

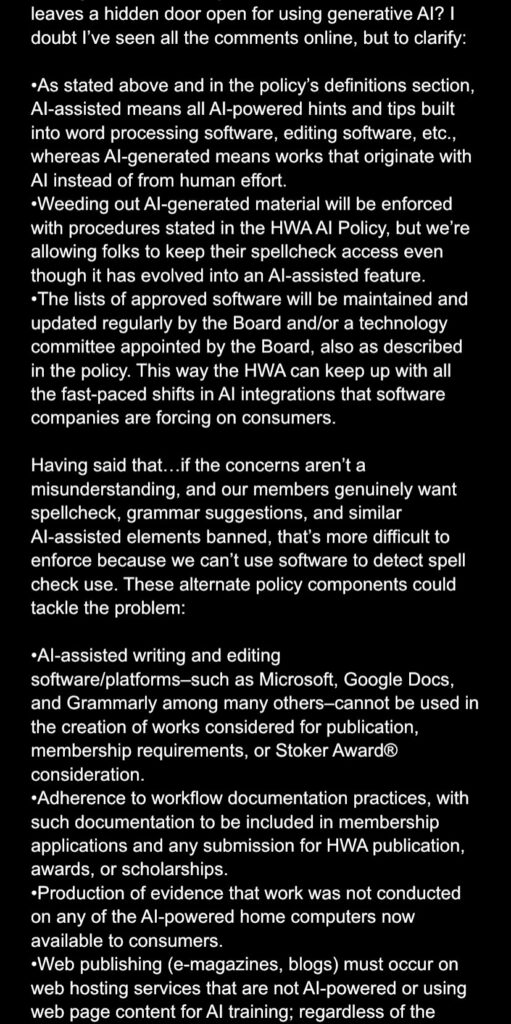

The draft policy distinguishes “AI-assisted” from “AI-generated”:

- AI-assisted means all AI-powered hints and tips built into word processing software, editing software, etc.

- AI-generated means works that originate with AI instead of human effort.

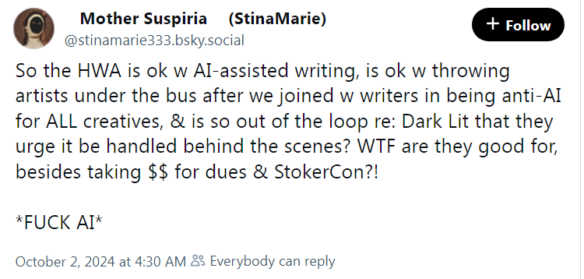

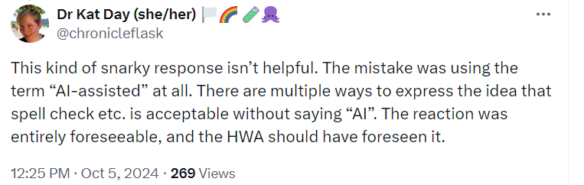

Here is some of the discussion about AI-assisted work.

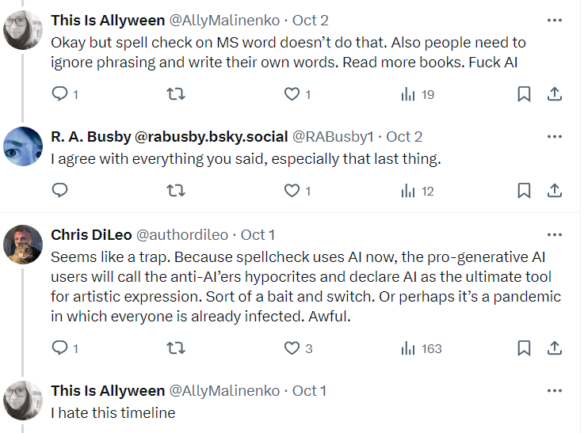

Here are some issues raised about work published in places that use AI-generated material, such as artwork.

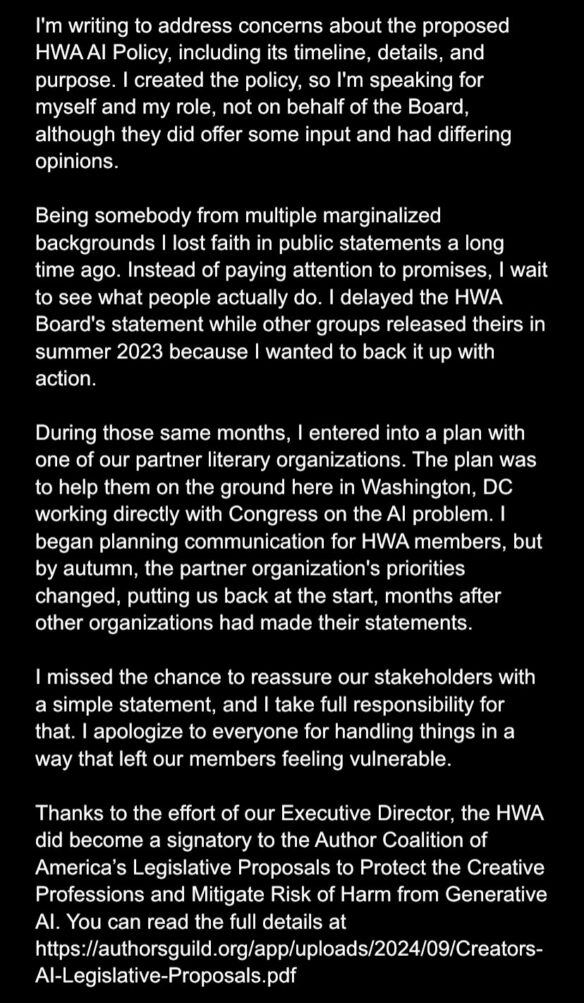

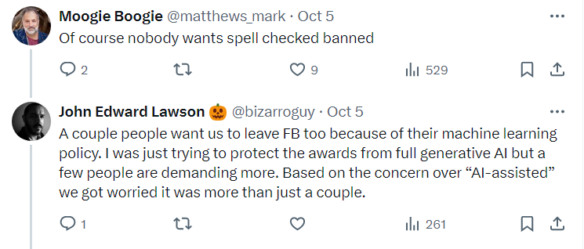

These comments led to a reply from Horror Writers Association President John Edward Lawson, which includes brief quotes from the draft policy.

Here’s an update about the proposed HWA AI Policy survey that went out earlier this week. This same message will be going out to HWA members as an iMailer in a little bit, but I wanted to ensure it was available publicly since there has been public discussion of it.

Dr. Kat Day rejected the introduction of spellcheck to the discussion.

Another thread responding to Lawson’s post included these comments:

Scott J. Moses, in a thread that starts here, characterized the Lawson statement this way:

Other comments in the past week:

Brian Keene made a comment in his newsletter about the exchanges in social media, while also announcing he is stepping down as an HWA Trustee.

“Boy,” Jeff Strand said, grinning that sly grin he grins when he’s being mischievous (which is often), “the Brian Keene I’ve known for twenty years wouldn’t have bothered making a plea like that. He would have just cracked skulls.”

We were standing in my bookstore, and Jeff was referring to a post I’d made on social media, pleading with the HWA membership to be patient while the organization’s officers and trustees worked through the vagaries of making an all-encompassing statement regarding Artificial Intelligence. I’d been of a mind that we should just quickly and easily release a statement that embodied “SCREW A.I.” but I was just one person and one Trustee, and things are structured — as they should be — so that no one person has all the power. And particularly when you are registered as a nonprofit, the way HWA is — there are standards and practices that you have to follow. You can’t just “crack skulls”, particularly if you like and respect the folks you’re working with.

If you look back at my 25+ year career, I’ve always been about helping my fellow writers. I’m a big believer in what Robert DeNiro says in Brazil: “We’re all in this together.” But I’m better at doing that from the outside, with my own tools. And that toolbox is quite busy these days. Between my obligations to Scares That Care (which supports our community while helping others), Vortex Books & Comics (which supports our community), and the soon-returning The Horror Show with Brian Keene, my dance card is full when it comes to helping my fellow writers. Add to that my own writing career, and my obligations to my wife, my sons, and my step-daughter, and to my aging parents… there’s a lot to juggle.

It is clear to me that I am much better at helping from the outside than I am from the inside. HWA, and any similar organization, is only as good as its volunteers, and such a role requires focus and devotion — something that’s hard to pledge if one is also doing a million other things. I can better serve you, my peers, by focusing my energies and talents into those things. Including this.

And so, while it’s been an honor to serve as an HWA Trustee, I’m stepping down due to those other obligations and commitments. My thanks and respect to the other Trustees and officers, and to the membership for allowing me to serve.

Keep writing, and Screw A.I.

Mary SanGiovanni’s wide-ranging discussion of ideas for the future of the HWA, “On the HWA”, said this about AI:

…I speak for myself here and this is not meant to reflect negatively or detrimentally in any way on anyone who is involved in class-action lawsuits (disclaimer, just in case), but I support those lawsuits and I believe a writing organization should absolutely, positively, 100% be against any AI usage which undercuts or eliminates job opportunities for writers, uses writers’ content without permission or payment, or performs not as a transcription aid or tool but as a substitution for the creativity, heart, blood, sweat, and tears that writers devote hours, days, months, and years of their lives to developing and perfecting over their lifetimes….

SFPA AND SFWA POSITIONS. Two other genre organizations that have already developed a policy or issued official comments are the Science Fiction and Fantasy Poetry Association (SFPA), and the Science Fiction and Fantasy Writers Association (SFWA). The links take you to the full discussion.

- SFPA Announces Generative AI Policy (File 770)

The organization will not accept or publish poetry, art, or other works created using a generative tool, either wholly or in part, and that published works created using a generative tool will not be eligible for SFPA awards, including the Rhysling, Elgin, and SFPA Poetry Awards….

- SFWA Comments on AI to US Copyright Office (SFWA Blog)

On October 30, the SFWA Board and the SFWA Legal Affairs Committee sent the following letter to the US Copyright Office in response to their August 2023 Notice of Inquiry regarding copyright law and policy issues in artificial intelligence, which is part of their AI Initiative.

We are aware that there is a wide range of opinion on the subject within our community, but the issues of known damage to fiction marketplaces and threats to original IP copyrights that these new AI tools pose must be made known to bureaucrats and lawmakers recommending and making policy. By doing so, when consensus emerges about the proper use of generative AI in art, we can ensure that such AI is created and utilized in a way that respects the rights of creative workers….

(SFWA also created an index to members’ posts on the topic: “SFWA Members Weigh in on AI & Machine Learning Applications & Considerations”.)


Clickity

Here to follow the situation.

Having some thoughts of my own, but not in coherent enough form to post at this time.

Would like to hear them when you’re ready.

Justice Potter Stewart’s famous dicta in Jacobellis v. Ohio, 378 U.S. 184 about obscenity, “I know it when I see it” is not a sound way to assess AI procedures. Some of the time now, and as we go along much if not most of the time in the future, people won’t know AI when they see it. We can see the confusion already in the discussion in the story. AI as generally defined these days is already here and has been for some time. It is expanding and will continue to do so. One is not going to be able to continue to use modern software while having an absolute ban on AI. The market provided by fiction writers is vastly too small to have any influence on how software for the general economy is going to be shaped. The idea that many of these people don’t realize that they are already using AI-assisted software and therefore don’t understand what they are asking for when they still want a total AI ban is troubling. The elements of GenAI that are most threatening can still largely be distinguished, so that presents a viable target surface for process now, but good policy is not going to be constructed by people that don’t understand the basics of what they themselves are doing and using today.

The discussion sounds like warning signs for organizational fracturing and decline.

I guess it would be worthwhile considering what actual problem people are aiming to solve.

While I think an LLM could, with a great deal of work & effort, generate a piece of short fiction that SOME people (if lied to & told it was by a human) might think was award-worthy…I don’t think people are going to actually make the kind of effort involved. LLMs can generate mediocre spam writing en masse, which has proven to be a problem for people who publish fiction but not something that endangers awards. The Stokers don’t need to worry much about an LLM-generated work being a finalist anytime soon.

Diffusion model-generated images are being actively used on book covers and related materials. Additionally, because the use of stock images on book covers has been a common practice for many years, the influx of generated images on stock image websites means there are additional routes for such images to end up in book or magazine covers. The chance that a book, story, etc. is nominated for a Stoker AND has a cover or illustration that is partly machine-generated is much higher.

Maybe focusing on the generated art work issue is a more immediate priority. It is also the issue more directly impacting people’s paid work currently.

So now, using Grammarly (and similar programs such as ProWritingAid) is considered as bad as using generative AI? Stop it. Just stop.

Yes, a lot of these programs now have (some) generative AI capabilities. That doesn’t mean everyone who uses those programs is using AI. I use the free version of Grammarly. I’m pretty sure the cheaper version of ProWritingAid does not provide generative AI (or provides limited changes).

Are we going to start saying that people who use Grammarly aren’t “real writers”? That reminds me of a Threads post that claimed people who use Canva aren’t “real” graphic designers — never mind that real graphic designers do use it.

I’m not using Grammarly to create text. I’m using it because it occasionally finds something I missed. And because I know enough about writing and editing to ignore the vast majority of its suggestions.

I have written first drafts of articles in Notepad — which has no spelling or grammar capabilities at all. When I paste the text into Word, I often find that I have very few typos. So I think I know how to write and spell and all that. But before sending something off, I also want to have an extra tool to find obvious errors.

For longer pieces, I would rather hire a human editor. But I want to use something to do a quick check before sending it to that editor. Sort of like vacuuming before hiring a housecleaner. 😉

For good or ill, at this point in time, AI is inescapable. To eliminate and disqualify AI-assisted tools that touch a creative work as well as publication venues that accept any version of work that may have used AI during the creation process will be very, very tricky for award administrators – if not impossible. Most creatives do not truly understand how and where AI is being used and how it has already infiltrated nearly every aspect of what they do, where they publish, and where they sell their works.

Therefore, we are going to have to find a different way to think about the guardrails that need to be put into place that won’t accidentally disqualify everyone in the process.

If we keep thinking about AI in the very broad strokes that we are thinking about it when writing rules, we will find ourselves in a very precarious position of disqualifying everything and everyone because so many of us do not understand AI, what it really is, what it does, and where/how it is being used…and who is using it.

Organizations and people are going to paint themselves into a corner soon if they disqualify AI-assisted works under blanket statements. AI is so much more than just “spell check” and “word suggestions.” Every major writing platform will soon be incorporating some form of AI into their software systems because they are built for businesses that are focused on streamlining work and empowering workers, not for creative writers.

AI-assisted also includes speech-to-text. So, if you use narration at all during your writing process, any content you speak and have automatically transcribed into text would be disqualified.

If you use lookup features during your research such as Chrome, Firefox, Explorer, Bing, etc, they ALL use AI. They are all starting to incorporate forms of generative AI into their results. So, the act of conducting internet based research naturally includes AI in the results you receive. So, if you copy and paste any of that information into your notes and then use that content in any way, you are using data that was generated in some way by AI.

No Hollywood produced script, film, TV show, etc would ever be allowed for any award ever because AI will have been used in some aspect of the final work produced. This is an inescapable fact at this point, not just because AI is now being used to evaluate scripts for green lighting, editing, and writing to some degree, but because digital visual effects and CGI are by their very nature AI. However, this would also include anything created using 3D printing, online marketing (because it’s all algorithm driven), and digital distribution because Netflix, Prime, AppleTV, etc are all AI first organizations that could not operate without AI managing their data streams.

There are so many other uses of AI by people (that they don’t even understand or know about) that creatives will get caught up in as well as publishers. It will become increasingly difficult to conduct any kind of awards vetting process, and finding qualified volunteers to conduct these processes will also become increasingly difficult. Thorough reviews and applications by potential nominees will have to be conducted, and that process may become invasive in order to prove that AI was never used or touched in any way by the creative.

It’s also important to understand that any digital artist is likely to be using AI tools, even if they are unaware of it. For example, most people understand that Photoshop now has a feature that allows for generative AI content creation and fill. It also uses AI in other ways from filters, lighting, textures, patterns, colors, and palettes. Auto selection, quick selection, and masking tools are AI driven. Facial recognition to identify people in artwork in order to select them or to select their inverse is AI driven. AI algorithms are also used in scaling images, changing resolutions, and noise reduction. Then again, there are the obvious uses of AI that everyone is thinking about like GANs or the use of platforms like Midjourney, DALL-E, etc. We are pretty close to the point when the only art works that will be eligible are traditional works of art created by hand with paint, ink, clay, etc.

One thing people are not talking about AT ALL is the use of AI generated voice. Everyone is talking about authors and artists, but no one is talking about voice actors. Publishers are turning more and more to AI generated voices for audio books. If a publisher uses an AI generated voice to produce an audiobook, it is using generative AI, not just AI assisted tools. Therefore, that publisher’s content across all channels would be disqualified.

And, we also have to think about publishing venues. Amazon publishes AI generated work, not just AI assisted work. There are people who are using generative AI tools to outright WRITE their books. They then use AI tools to edit and refine their books. They use AI voices to turn those books into audiobooks, and they use AI art for their covers. Amazon is the single most powerful book publisher on the globe. They use AI in EVERYTHING they do, not just allowing AI generated content to be published. They use AI algorithms in their marketing, in their internal sales strategies, in their building designs… it’s everywhere. So, with the strict rules that creatives are wanting to enforce about publication venues, any book published or sold through Amazon would be disqualified from awards. This will impact EVERY writer, EVERY artist, and EVERY publisher creating anything.

And this applies to big brick and mortar bookstores, too. They use AI within their stores for purchasing, mapping and tracking stock, watching customers for shoplifting, and in their online sales.

Then, of course, publishers who use Adobe, Canva, etc. are using tools that incorporate AI in book design. They are using spell check and autocorrect. They are using digital optimization for covers and layouts, AI to monitor rights and legal issues, ad campaigns, social media, predictive analytics for marketing, and in some cases translation. Some of them are also starting to use AI to help with fact checking and plagiarism.

There are so many other uses that I can’t think of right now that touch the creative industry and fall into the AI-assisted tools category.

I think it’s time to have some real conversations that don’t start with “Fuck AI” because that isn’t helping us get to solutions that will protect creators.

We need to truly understand the uses of AI, how organizations are using it, and where the practical lines are that will allow creatives to continue creating with confidence that the work they are writing, drawing, voicing, etc … and selling … won’t be disqualified because of something that is utterly beyond their control to manage. This is a big discussion that is going to require nuance and understanding because the question is no longer AI or no AI because our world is AI powered whether we see it or not. This is not a recent change. AI has been building for decades and we are finally starting to “see” it and how it impacts us now, but there are so many other ways that it is impacting us that we don’t understand and don’t see … yet.

Let’s really think about how we design our community and our rules in such a way that our people can more easily navigate these spaces with confidence when creating today as well as in the future.

Who is using AI spellcheck? Also it is perfectly possible to use old Word or an open source word processor that just checks a word against a list of words and no AI trash.

@ima

There’s an article on Microsoft’s website that says, “One of the most popular AI writing tools is something you may not think about as being AI—spellcheck and grammar check.” (Predictive text features also use AI.)

I don’t think writers should feel pressured into using older versions of Word that might not be compatible with their needs just because some people think that using a spell check with some AI features makes them “not real writers.” Those older versions of Word are not updated and can be hard to find and install legally.

Also, the idea of going to yet another source (yes, even open source) for spell check gives me hives. How do I know they won’t start using AI, too? How do I know that open source program is good enough for my needs? What if they stop updating it?

@Erin Great points!

@ Erin – ” because digital visual effects and CGI are by their very nature AI.” This is simply not true. I’m an expert in this domain, so I feel comfortable in contradicting you outright, but I suspect much else of what you’ve written is also false or misinformed.

@Cliff I spent over 14 years working alongside and with graphic designers. Erin’s statement that you pluck out has been true for well over twenty years. AI is the only way the techniques work that are deployed in packages like Photoshop, Illustrator, Premiere, After Effects, Lightroom, Maya, Blender, etc. If you want to see what non-AI graphics tools look like, check versions prior to the late 1990s.

@Cliff Computer Generated Images today are very different than those of 10 or even 20 years ago. It is easy to get lost in the semantics of what is and what isn’t AI, especially where generative AI is concerned. But that doesn’t change the fact that AI is math at its most basic level. CGI is a mathematical technique used to create models and AI-assisted tools are used in conjunction with CGI. As things are going, I would almost guarantee you that in the near future, AI will get wrapped so completely into CGI processes as to be nearly indistinguishable from it.

And, yes, I admit that I used a wide brush when making my point about the various uses of AI…. but when you get down to it, AI is math, CGI is math, and they both require compute power to run their calculations. Are you really prepared to say that a machine, which is capable of running mathematical calculations at a speed that outpaces human ability, is not a rudimentary form of artificial intelligence? Is the point of this conversation to get stuck in semantics about the definition of AI? Or is the point to discuss the potential use cases that are coming and could trip up the good work that people are trying to do to prevent harm to creators?

Also, while I may have taken a few shortcuts in my post to make a point more quickly, you could have just asked me about my qualifications before deciding your expertise with CGI gives you the authority to determine that everything I wrote was likely false or misinformed because we don’t quite align on the semantics of what artificial intelligence is…. anyway, send me an email and I will tell you. erin.m.underwood at gmail dot com

What if an author makes a sale to a magazine which subsequently allows the use of AI-generated artwork? It seems unfair to use ‘guilt by association’ to bar that author’s work from award consideration.

@ Erin – yes, I owe you an apology for that. I got a sense some of your points seemed exaggerated, but I should not have characterised them as ‘false or misinformed’.

FWIW, I do broadly agree with you and Sean about the need to have a nuanced understanding of the increasing role of AI in the software we use for creative and other endeavours.

I don’t agree that a computer, capable of conducting mathematical operations faster than a human, is therefore to be considered artificially intelligent. Nor do I think that equating CGI and AI is valid because both are based on mathematical techniques.

@ Sean, my understanding is graphic designers usually create still images, while I was responding to Erin’s point about CGI and visual effects in movies. With that in mind, I spent the last ten years in a senior and more recently technical leadership role on Pixar’s RenderMan team. RenderMan was the first production-quality renderer used to generate CGI, and is still used today for all of Pixar’s productions, the vast majority of ILM’s visual effects work, and by a large number of effects studios throughout the world (used on productions as diverse as LOTR, The Lion King, Jungle Book). It takes scene descriptions from packages such as Blender and Maya, as well as Katana and Houdini, and computes the light transport to generate the images you see on screen.

https://graphics.pixar.com/library/indexAuthorCliff_Ramshaw.html

I can tell you that, up until very recently, RenderMan featured no AI elements. Over the last couple of years the team incorporated an AI ‘denoiser’ developed by Disney Research which enables renders to finish more quickly before they are fully resolved.

Prior to Pixar, I worked for seven years at ILM, developing rigid-body and fluid simulation software, as well as working on the company’s in-house digital content creation package, Zeno, which is similar in functionality to Maya or Blender, in that it supports geometry creation, light rigging, animation, material definitions, simulation etc etc etc. This was from 2007 to 2014. At no time was there any AI of any sort in Zeno or any other piece of software used by ILM to create, for example, the visual effects of recent Star Wars among many others.

@Cliff no worries. 🙂 I have a bad habit of letting my brain live in the future when it comes to AI and emerging technology because it’s my job to imagine how it is and will be used as well as who it will impact in order to help industry leaders to understand its impact upon their people and their organizations.

I also appreciate that you took the time to post your original note. It’s really important to talk about these things and disagreement and miscommunication and understanding are all parts of that process to ensure that we find the best path possible as we go into the future.

I’m going to send you a quick note over on LinkedIn. I hope that’s okay. Thanks!

Hey Erin – I appreciate the discussion. I’ll respond to your LinkedIn message.